Realtime AI Drawing Canvas: What is real-time generation, and why will it change generative art and design in 2024?

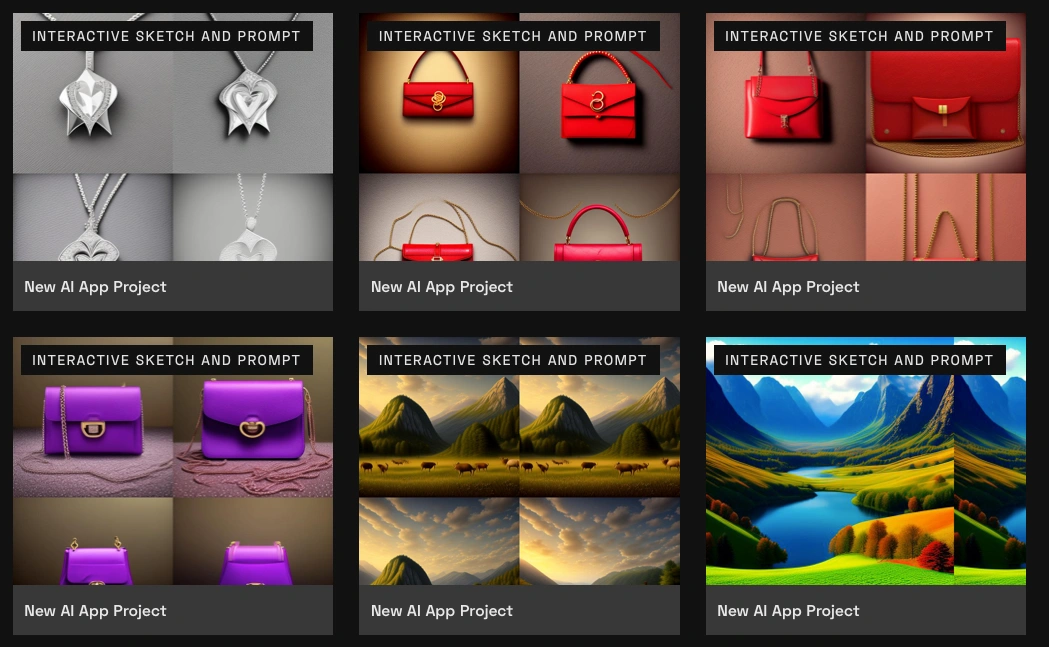

Real-time generation refers to a new wave of text-to-image models that generate very quickly, in about one second per image or even less. These models also offer consistent generation, which means you can iterate on an image in almost real time. You can add or remove words in your prompt and immediately see how that affects your image. You can also sketch interactively and see how your image changes with every stroke you add.

Diffusion models have dominated image generation over the last two years. They are the generative AI technology underlying platforms like DALL-E, Midjourney, Stable Diffusion, and many other image generators. Although such models revolutionized image generation, they are notoriously slow. Each generated image has to go through many so-called “inference” steps, which take your prompt, start from pure noise, and iterate many times to produce an image. As a user, if you don’t like the outcome, you have to repeat this process many times, manipulating the prompt and playing with knobs that most likely don’t make sense to you, like “guidance scale” and “schedulers”, until you reach a satisfying image. Every time you change a single word in your prompt, you are likely to wait 30 seconds to see the effect of that change. Real-time generation is now here and is likely to change this user experience dramatically.
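To make the waiting time concrete, here is a back-of-the-envelope sketch in Python. The step counts and the cost per step are assumptions chosen only so that the slow case lands near the 30 seconds mentioned above; real timings depend on the model and the hardware.

```python
# Illustrative numbers only: both the step counts and the per-step cost are assumptions.
SECONDS_PER_STEP = 0.6  # assumed cost of one inference step


def wait_seconds(num_steps: int, seconds_per_step: float = SECONDS_PER_STEP) -> float:
    """Approximate wait for one image: number of steps times the cost of each step."""
    return num_steps * seconds_per_step


# Compare a many-step run with few-step and single-step runs.
for label, steps in [("traditional diffusion", 50), ("few-step", 4), ("single-step", 1)]:
    print(f"{label:>22}: {steps:>2} steps -> about {wait_seconds(steps):.1f} s per image")
```

Under these assumed numbers, cutting the step count is what turns a wait of many seconds into something close to interactive.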

This new wave of real-time image generation is based on what are called “Consistency Models”. These can reduce the number of steps needed in the diffusion process to just a handful, or in some cases a single step. Consistency models do that by learning “shortcuts” that map noise directly to images. The bottom line is that it works, and it is here to change the way images are generated.
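The “shortcut” idea can be illustrated with a toy example in Python. This is not how Playform or any production model is implemented. It assumes the “images” are single numbers drawn from a Gaussian, a case where the path from noise to data is known exactly, so the shortcut can be written as a formula. A real consistency model is a neural network trained to approximate such a jump. All names here (`many_step_sampler`, `shortcut`, `T_MAX`) are hypothetical.

```python
import math

MU, S = 0.0, 1.0   # toy "image" distribution: a 1-D Gaussian with mean MU and std S
T_MAX = 10.0       # noise level at which generation starts


def many_step_sampler(x_noisy: float, num_steps: int) -> float:
    """Walk from noise level T_MAX down to 0 in small Euler steps (the slow route)."""
    x = x_noisy
    for i in range(num_steps):
        t = T_MAX * (1 - i / num_steps)
        t_next = T_MAX * (1 - (i + 1) / num_steps)
        slope = t * (x - MU) / (S**2 + t**2)  # exact denoising direction for this toy
        x += (t_next - t) * slope
    return x


def shortcut(x_noisy: float) -> float:
    """Jump straight to the end of the same path in one evaluation."""
    return MU + (x_noisy - MU) * S / math.sqrt(S**2 + T_MAX**2)


x_start = 7.5  # an arbitrary noisy starting value
target = shortcut(x_start)
for steps in (1, 4, 50, 1000):
    error = abs(many_step_sampler(x_start, steps) - target)
    print(f"{steps:>4} steps: distance from the one-jump result = {error:.4f}")
```

In this toy, the iterative sampler only approaches the one-jump answer as the number of steps grows, which is the cost that a learned shortcut avoids.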

Slow Cooking vs. Microwaving Your Image Generation: Pros and cons of real-time generation:

Pros: Faster, interactive, and ultimate control, especially when connected to a sketching tool to influence the generation.

Cons: Potentially lower quality compared to traditional models.

The bottom line is: if you want to iterate fast on your idea, real-time generation is here for you. I imagine real-time generators will be an indispensable tool for designers moving forward. If you want intricate details and sophisticated effects, slower models that run more iterations might be best for you. The good news is that with real-time generators you can typically still increase the number of inference steps if you need better quality. I expect this speed-quality trade-off to vanish this year.

[ VIDEO HERE ]

Example: interactive generation for jewelry design

How to do real-time interactive generation in Playform:

Log in to Playform

Click Create a Project or the + icon on mobile

From the AI Apps, choose the “AI Draw / Sketch an Image” app

Start prompting or (optionally) sketching!

Optionally, choose a style for the generation.

Optionally, adjust the advanced controls. The most important is the slider that controls the effect of the sketch. You can lower that slider to make the prompt more dominant in the result and avoid using the sketch literally in the generated image.

Learn more about other sketching tools in Playform